Never Too Rich or Thin: Compress SQLite by 80%

We're big fans of using SQLite for anything of even moderate complexity where you might otherwise use a file. The benefits are many, but sometimes you want to go easy on file storage. [Phiresky] has a great answer to this: the sqlite-zstd extension provides transparent row-level compression for SQLite.

Of course, there are other options, but as the article mentions, each of them has its drawbacks. By compressing just the data in each row of a table, however, you retain random access and avoid some of the drawbacks of the other methods.

A compressed table has an uncompressed view and an underlying compressed table. The compression dictionary is loaded for each table and cached to improve performance. From the application's point of view, the uncompressed view looks like a normal table, so you shouldn't need to modify your code.
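To make the view-over-compressed-table idea concrete, here is a minimal sketch that uses only Python's standard library. It is not the sqlite-zstd implementation: `zlib` stands in for Zstandard, there is no dictionary, and the names `_logs_compressed`, `logs`, `compress`, and `decompress` are hypothetical ones chosen for this illustration.

```python
import sqlite3
import zlib

conn = sqlite3.connect(":memory:")
# Allow application-defined functions inside views and triggers on builds
# where this is off by default (older SQLite versions ignore the pragma).
conn.execute("PRAGMA trusted_schema = ON")

# Stand-in codec: zlib instead of Zstandard, no dictionary.
conn.create_function("compress", 1, lambda s: zlib.compress(s.encode("utf-8")))
conn.create_function("decompress", 1, lambda b: zlib.decompress(b).decode("utf-8"))

conn.executescript("""
-- The underlying table stores compressed blobs.
CREATE TABLE _logs_compressed (id INTEGER PRIMARY KEY, body BLOB);

-- The view decompresses on the fly, so readers see plain text.
CREATE VIEW logs AS
    SELECT id, decompress(body) AS body FROM _logs_compressed;

-- Writes to the view are redirected to the compressed table.
CREATE TRIGGER logs_insert INSTEAD OF INSERT ON logs
BEGIN
    INSERT INTO _logs_compressed (id, body)
    VALUES (NEW.id, compress(NEW.body));
END;
""")

# The application only ever talks to "logs".
text = "GET /index.html 200 " * 50
conn.execute("INSERT INTO logs (body) VALUES (?)", (text,))

row = conn.execute("SELECT body FROM logs WHERE id = 1").fetchone()
stored = conn.execute(
    "SELECT length(body) FROM _logs_compressed WHERE id = 1"
).fetchone()[0]

print(len(text), "bytes of text stored as", stored, "bytes")
```

The application code inserts into and selects from `logs` as if it were an ordinary table, which is the property the extension aims for. This toy version compresses at insert time; the real extension defers that work.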

You can select how the compression groups the data, which can improve performance. For example, instead of grouping a fixed number of rows, you can compress groups of records based on dates, or even use a single fixed dictionary, which can be useful for tables that never change.
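Why grouping matters is easier to see with a small experiment. The sketch below again uses `zlib` from the standard library as a stand-in, relying on its preset-dictionary support; Zstandard trains its dictionaries rather than concatenating samples, and the `choose_group` function and the sample data are made up for this example.

```python
import zlib

def compress_with_dict(data: bytes, zdict: bytes) -> bytes:
    """Compress one row using a shared preset dictionary."""
    c = zlib.compressobj(level=9, zdict=zdict)
    return c.compress(data) + c.flush()

def decompress_with_dict(blob: bytes, zdict: bytes) -> bytes:
    """Decompress one row; the same dictionary must be supplied."""
    d = zlib.decompressobj(zdict=zdict)
    return d.decompress(blob) + d.flush()

def choose_group(date: str) -> str:
    """Hypothetical grouping rule: one dictionary per month."""
    return date[:7]  # "2024-03-15" -> "2024-03"

# Sample rows seen earlier, used to build one dictionary per group.
# Naive approach: concatenate the samples (real Zstandard trains a dictionary).
samples = [
    ("2024-03-01", b'{"level": "info", "service": "api", "msg": "started"}'),
    ("2024-03-02", b'{"level": "warn", "service": "api", "msg": "slow query"}'),
    ("2024-04-01", b'{"severity": 3, "host": "db1", "event": "vacuum"}'),
]
dictionaries: dict[str, bytes] = {}
for date, payload in samples:
    group = choose_group(date)
    dictionaries[group] = dictionaries.get(group, b"") + payload

# A new row is compressed with the dictionary of its own group.
new_date = "2024-03-15"
new_row = b'{"level": "info", "service": "api", "msg": "stopped"}'
zdict = dictionaries[choose_group(new_date)]

with_dict = compress_with_dict(new_row, zdict)
without_dict = zlib.compress(new_row, 9)
restored = decompress_with_dict(with_dict, zdict)

print(len(new_row), len(without_dict), len(with_dict))
```

A single short row has little internal redundancy, so compressing it alone saves almost nothing. A dictionary built from similar rows supplies the shared structure, which is why choosing groups of rows that resemble each other pays off.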

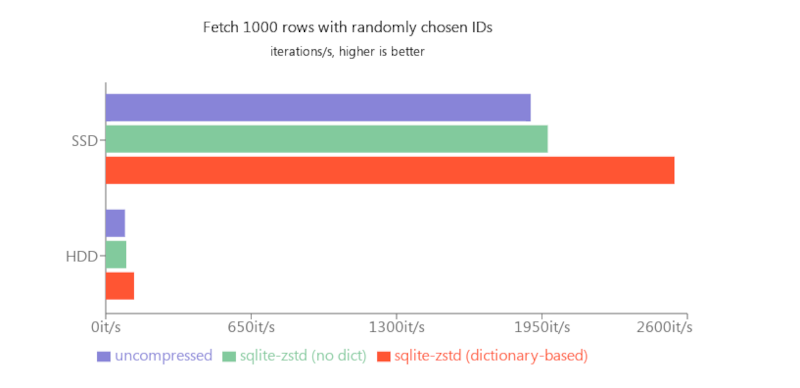

Speaking of performance, decompression happens on the fly, but compression and dictionary creation happen in the background when the database is idle. Benchmarks show a performance hit, of course, but that's always the case: you're trading speed for space. On the other hand, random access is actually faster with compressed tables since there is less data to read. Random updates, however, were slower even though compression was not happening at the time.

If you want to get started quickly using SQLite, there's a Linux Fu for that. You can even use versioning with a Git-like system, another advantage over traditional files.
