Controlling a bionic hand with tinyML keyword detection

Motion commands are traditionally sent to prostheses through electromyography (reading electrical signals from muscles) or simple Bluetooth modules. In this project, Ex Machina has developed an alternative: the user speaks a voice command and the hand performs the corresponding gesture.

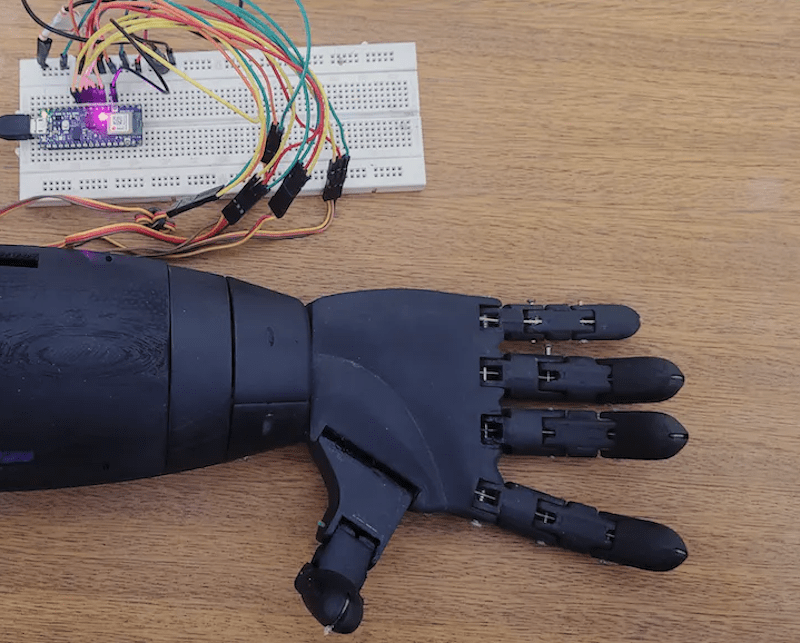

The hand itself is built around five SG90 servo motors, each moving an individual finger of the larger 3D-printed hand assembly. They are all controlled by a single Arduino Nano 33 BLE Sense, which collects voice data, interprets the requested gesture, and sends signals to the servos and to an RGB LED that indicates the action in progress.

To recognize the keywords, Ex Machina collected 3.5 hours of audio data spread over six labels: "one", "two", "OK", "rock", "thumbs up", and "nothing", all spoken in Portuguese. The samples were then added to a project in Edge Impulse Studio and passed through an MFCC processing block to extract features from the voice recordings. Finally, a Keras model was trained on the resulting features and yielded 95% accuracy.

Once deployed to the Arduino, the model continuously receives new audio data from the built-in microphone and infers the most likely label. A switch statement then sets each servo to the correct angle for that gesture. For more details on the voice-activated bionic hand, see Ex Machina's Hackster.io article.
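The label-to-servo step can be sketched roughly as follows. This is a minimal illustration in plain C++, not Ex Machina's actual firmware: the enum order, the finger order, the `kOpen`/`kClosed` angles, the 0.8 confidence threshold, and the helper names `pickGesture` and `anglesFor` are all assumptions made for the example.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// The six labels named in the article; their order here is an assumption.
enum class Gesture : std::uint8_t { One, Two, Ok, Rock, ThumbsUp, Nothing };

constexpr std::size_t kNumLabels = 6;
constexpr std::size_t kNumFingers = 5;

// Assumed finger order: thumb, index, middle, ring, pinky.
using FingerAngles = std::array<int, kNumFingers>;

constexpr int kOpen = 0;      // hypothetical servo angle for an extended finger
constexpr int kClosed = 180;  // hypothetical servo angle for a curled finger

// Pick the label with the highest classifier score. If even the best score
// is below the (assumed) threshold, fall back to Gesture::Nothing.
Gesture pickGesture(const std::array<float, kNumLabels>& scores,
                    float threshold = 0.8f) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > scores[best]) best = i;
    }
    if (scores[best] < threshold) return Gesture::Nothing;
    return static_cast<Gesture>(best);
}

// Map a gesture to one target angle per finger using a switch statement.
// The poses are illustrative guesses, not measured values.
FingerAngles anglesFor(Gesture g) {
    switch (g) {
        case Gesture::One:      return {kClosed, kOpen, kClosed, kClosed, kClosed};
        case Gesture::Two:      return {kClosed, kOpen, kOpen, kClosed, kClosed};
        case Gesture::Ok:       return {kClosed, kClosed, kOpen, kOpen, kOpen};
        case Gesture::Rock:     return {kClosed, kOpen, kClosed, kClosed, kOpen};
        case Gesture::ThumbsUp: return {kOpen, kClosed, kClosed, kClosed, kClosed};
        case Gesture::Nothing:  break;
    }
    // "Nothing" (or any unexpected value) leaves the hand relaxed and open.
    return {kOpen, kOpen, kOpen, kOpen, kOpen};
}
```

On the board itself, each returned angle would presumably be passed to the matching servo, for example through `Servo::write` from the Arduino Servo library, and the scores would come from the deployed Edge Impulse model. Keeping the mapping free of hardware calls, as here, makes it easy to check on a desktop before flashing.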
