NVIDIA to Share New Details on Grace CPU, Hopper GPU, NVLink Switch, Jetson Orin

During four lectures spread over two days, experienced NVIDIA engineers will describe innovations in accelerated computing for modern data centers and systems at the network edge.

Speaking at a virtual Hot Chips event, an annual gathering of processor and system architects, they will disclose performance figures and other technical details for NVIDIA's first server processor (the Grace CPU), the Hopper GPU, the latest version of the NVSwitch interconnect chip, and the NVIDIA Jetson Orin System on Module (SoM).

The presentations provide new insights into how the NVIDIA platform will achieve new levels of performance, efficiency, scalability, and security.

Specifically, the discussions demonstrate a design philosophy of innovating across the full stack of chips, systems, and software where GPUs, CPUs, and DPUs act as peer processors. Together, they create a platform that already runs AI, data analytics, and high-performance computing across cloud service providers, supercomputing centers, enterprise data centers, and stand-alone systems.

Inside NVIDIA's First Server Processor

Data centers require flexible clusters of CPUs, GPUs, and other accelerators sharing huge pools of memory to deliver the power-efficient performance that today's workloads demand.

To address this need, Jonathon Evans, a distinguished engineer and 15-year veteran of NVIDIA, will describe NVIDIA NVLink-C2C. It connects CPUs and GPUs at 900 gigabytes per second with 5 times the energy efficiency of the existing PCIe Gen 5 standard, thanks to data transfers that consume only 1.3 picojoules per bit.
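To put those figures in perspective, the short calculation below estimates the power spent on data movement when the link runs at its full quoted bandwidth. It is a back-of-the-envelope sketch, not an NVIDIA figure. It assumes 1 gigabyte = 10^9 bytes, sustained transfer at the full 900 GB/s, and that "5 times the efficiency" means PCIe Gen 5 would spend five times the energy per bit. The resulting 6.5 pJ/bit for PCIe is implied by that reading and is not stated in the article.

```python
# Figures quoted in the article.
BANDWIDTH_GB_PER_S = 900   # NVLink-C2C bandwidth, gigabytes per second
ENERGY_PJ_PER_BIT = 1.3    # energy per transferred bit, picojoules
EFFICIENCY_GAIN = 5        # claimed advantage over PCIe Gen 5


def link_power_watts(bandwidth_gb_per_s, energy_pj_per_bit):
    """Power needed to move data at the given rate and energy cost per bit."""
    bits_per_second = bandwidth_gb_per_s * 1e9 * 8   # assumes 1 GB = 1e9 bytes
    joules_per_bit = energy_pj_per_bit * 1e-12       # picojoules -> joules
    return bits_per_second * joules_per_bit          # J/s = W


# Power drawn by the link itself at full quoted bandwidth.
nvlink_power = link_power_watts(BANDWIDTH_GB_PER_S, ENERGY_PJ_PER_BIT)

# Assumption: a 5x efficiency gap means 5x the energy per bit on PCIe Gen 5.
implied_pcie_pj = ENERGY_PJ_PER_BIT * EFFICIENCY_GAIN
pcie_power = link_power_watts(BANDWIDTH_GB_PER_S, implied_pcie_pj)

print(f"NVLink-C2C at 900 GB/s: {nvlink_power:.2f} W")
print(f"Implied PCIe Gen 5 energy: {implied_pcie_pj:.1f} pJ/bit")
print(f"Same traffic at the implied PCIe cost: {pcie_power:.1f} W")
```

Under these assumptions the link spends roughly 9.4 W moving 900 GB/s, against about 47 W for the same traffic at the implied PCIe Gen 5 energy cost.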

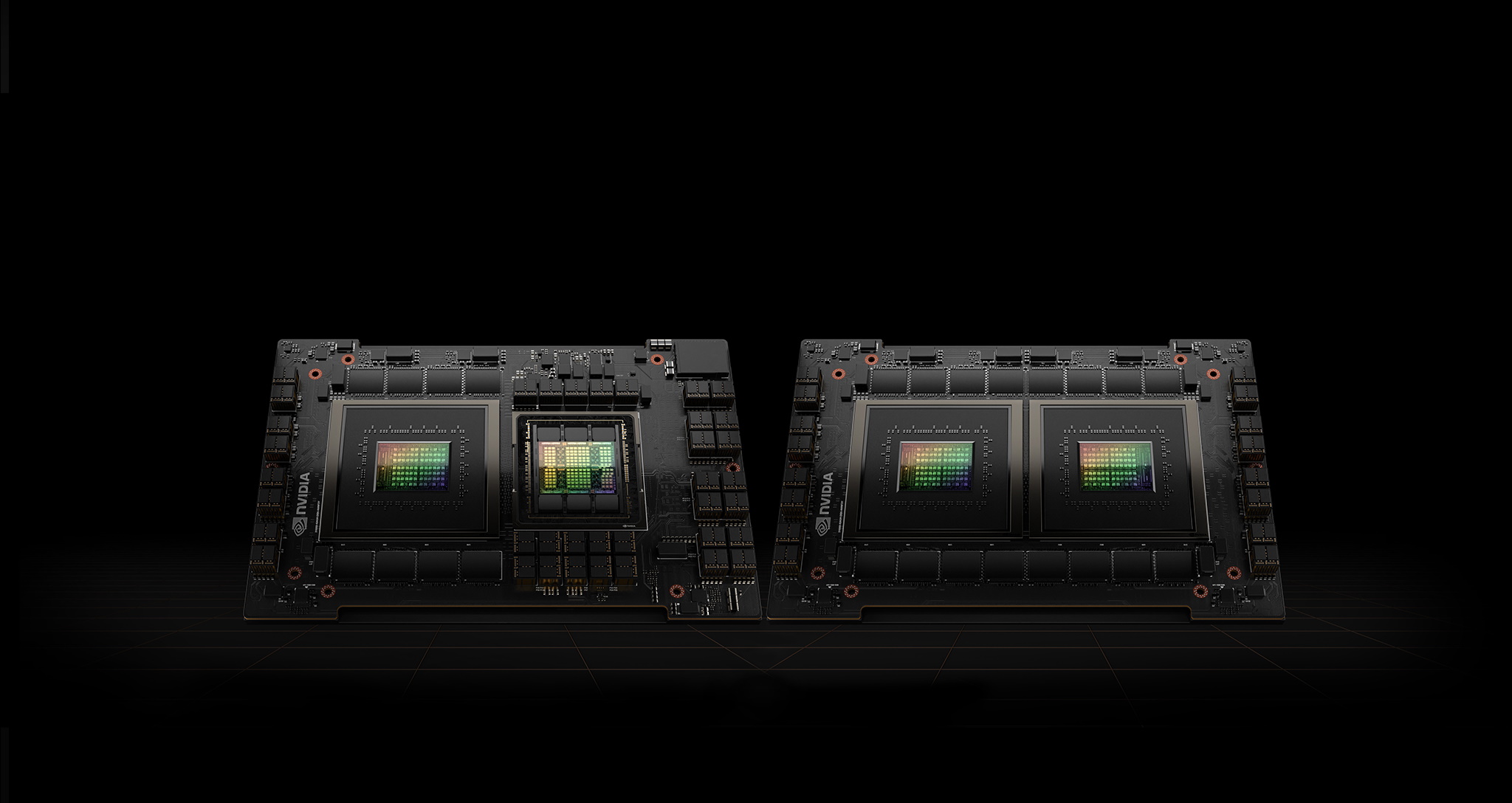

NVLink-C2C connects two CPU chips to create the NVIDIA Grace processor with 144 Arm Neoverse cores. This is a processor designed to solve the world's most important computing problems.

For maximum efficiency, the Grace processor uses LPDDR5X memory. It achieves one terabyte per second of memory bandwidth while keeping the power consumption of the entire complex at 500 watts.

One Link, Many Uses

NVLink-C2C also bridges Grace CPU and Hopper GPU chips as memory-sharing pairs in the NVIDIA Grace Hopper Superchip, providing maximum acceleration for performance-intensive tasks such as AI training.

Anyone can create custom chiplets using NVLink-C2C to seamlessly connect to NVIDIA GPUs, CPUs, DPUs, and SoCs, expanding this new class of integrated products. The interconnect will support the AMBA CHI and CXL protocols used by Arm and x86 processors, respectively.
